I replicate our skills with machines to realize what does (not) really make us human.

Contact me at [the domain of this website without dot com]@mit.edu

Since you’re here, go read my papers. Start with my latest one on LLMs (Italian version).

Bio. Currently, postdoc at MIT CSAIL with Daniela Rus, also working with Phillip Isola and Jacob Andreas. Previously, CS postdoc at the University of Oxford with Michael Bronstein. Involved in Project CETI, where we think it’s time to understand what whales are saying to each other using machine learning. Mentor of the Orthogonal School within Elicsir, where we cultivate a new generation of talented computer scientists in Italy.

I received my PhD degree in Computer Science cum laude from the Sapienza University of Rome, with a thesis on “Artificial Scientific Discovery” (video). In Rome, I worked in the GLADIA research group, advised by Emanuele Rodolà. During my PhD, I also worked as an Applied Scientist Intern for the Amazon Lablet in Tübingen with Francesco Locatello, and I spent time with Alex Bronstein’s group at Technion in Haifa. I was also an ELLIS student.

Before starting my PhD, I graduated in Physics in 2016 (BSc), with a thesis about using neural networks to spot dark photons at CERN, advised by Stefano Giagu; and two years later in Computer Science (MSc), by defeating the Italian Othello champion with a cheap reimplementation of AlphaGo Zero from scratch, advised by Alessandro Panconesi. Along with Roberto Di Leonardo, he was also my tutor at the pivotal Sapienza School for Advanced Studies. In this period, I also worked as a Research Intern for Spiketrap with Andrea Vattani.

I am making a better Google Scholar with Bardh Prenkaj, Oscar Michele Norelli, and Francesco De Benedittis. The first stable release of BetterScholar is planned for early 2026; a beta version is already available at betterscholar.org. Read more here: Why BetterScholar?

“You insist that there is something a machine cannot do. If you will tell me precisely what it is that a machine cannot do, then I can always make a machine which will do just that!”. John von Neumann (don’t miss The Maniac)

“Computers are a way of instantiating abstract objects and their relations into physical objects and their motion”. David Deutsch (don’t miss The Beginning of Infinity)

What makes us human?

I am interested in understanding what allowed humans to reach the Moon. What do we have that animals and machines are still missing?

Intelligence, agency, curiosity. I want to understand these traits to the point of being able to implement them in a machine. At long last, we have enough computing power to submit our models of human faculties to experimental evaluation.

This is a groundbreaking moment in science: AI is doing now what physics did centuries ago, reclaiming from philosophy the duty of explaining some fundamental aspects of reality, such as what knowledge is and how it is created.

Currently, I am obsessed with our use of symbols, especially with the mechanism through which humans attach meaning to new signs, such as when a redditor creates a new meme, or a scientist proposes a new theory. Do our large models of language actually encompass this mechanism?

I want to build machines capable of writing scientific articles about their own new ideas, pushing our knowledge forward.

Highlights of my research in this direction

- If LLM hallucinations are due to a void of intention rather than a lack of factuality, can they ever legitimately discover new knowledge?

LLMs can hide text in other text of the same length, LockLLM at NeurIPS 2025 [arXiv] [code] [Twitter thread].

- The meaning was already there: connecting text and images without training a neural network to do so.

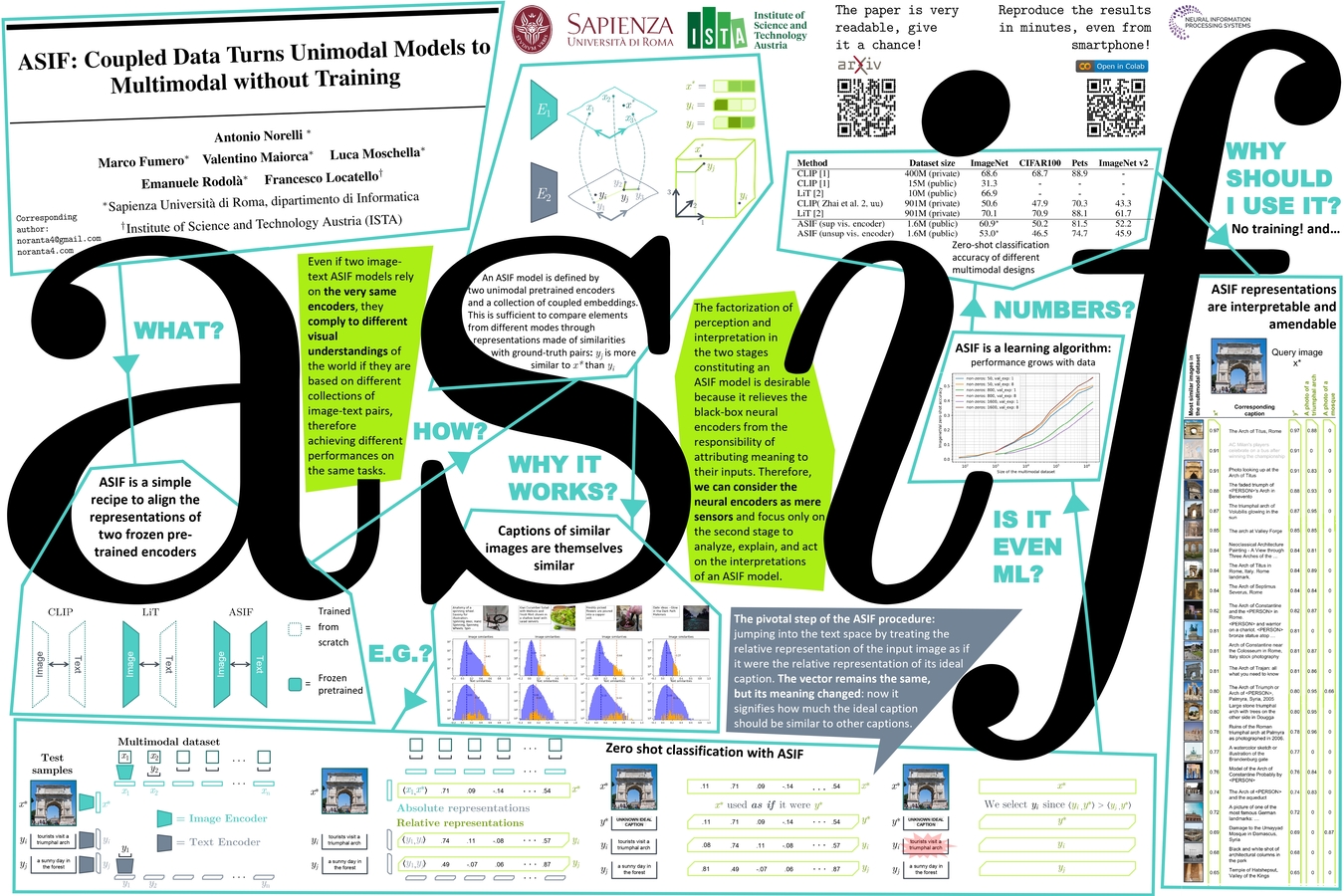

ASIF: Coupled Data Turns Unimodal Models to Multimodal Without Training, NeurIPS 2023 [arXiv] [code] [Twitter thread] [Alex Smola about this work] [Poster ↓].

- A small model of an artificial scientist: mastering the game of Zendo with Transformers.

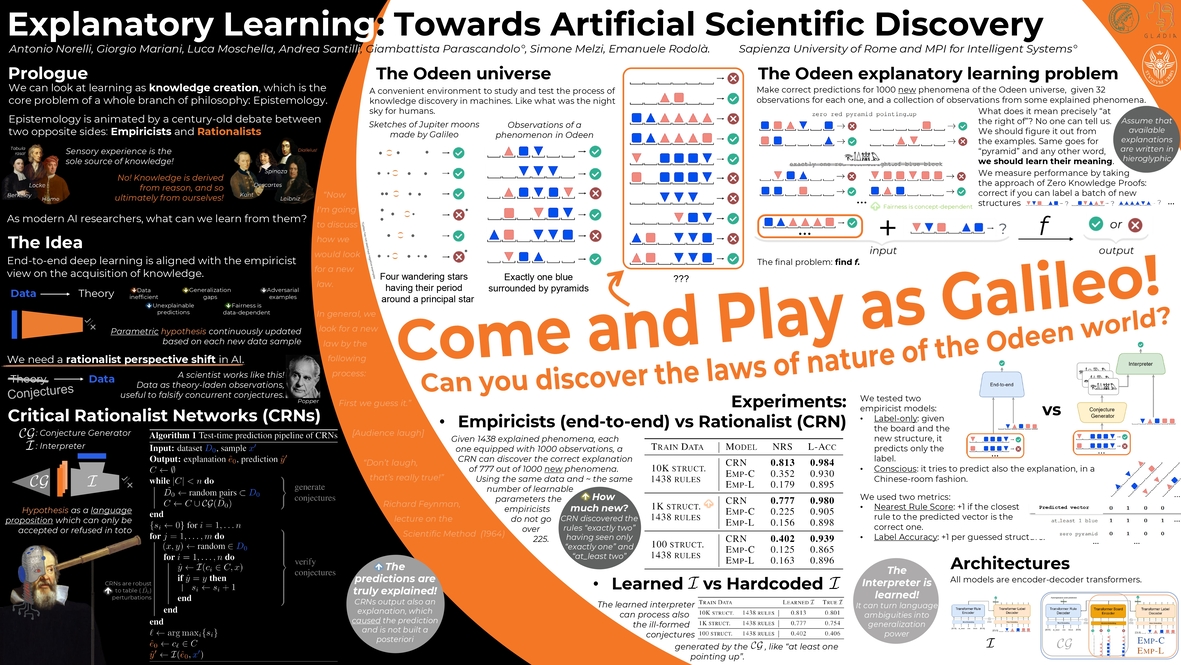

Explanatory Learning: Beyond Empiricism in Neural Networks, KLR workshop at ICML 2023 [arXiv] [code] [Twitter thread] [Judea Pearl about this work][Poster ↓].

Other featured research

- It happens that different neural networks trained on the same stuff learn intrinsically equivalent latent spaces.

Relative Representations Enable Zero-shot Latent Space Communication, ICLR 2023 [arXiv] [code] [Oral at ICLR23 with 8-8-10 reviews].

- AlphaGo Zero for Othello. With two ideas to speed up the learning, and tested in a live match against a former world champion.

OLIVAW: Mastering Othello without Human Knowledge, nor a Penny, IEEE ToG 2022 [arXiv] [Trailer of the match].

- With the right geometric prior, 11 samples are enough to train a generative model for 3D shapes of humans or animals.

LIMP: Learning Latent Shape Representations with Metric Preservation Priors, ECCV 2020 [arXiv] [code] [Oral at ECCV 2020 (2 min, 10 min video)].

- The task with the widest gap between human and machine performance in BIG-bench, a collaborative effort to test Language Models.

Beyond the Imitation Game: Quantifying and Extrapolating the Capabilities of Language Models, TMLR 2023 [arXiv] [SIT task].

Check my Google Scholar profile for a complete list of my articles.

Selected Invited Talks during my PhD

- 12/06/2023 Simons Institute at the University of California, Berkeley (US 🇺🇸). Opening talk at the workshop Decoding Communication in Nonhuman Species II, organized by David Gruber, Shafi Goldwasser, and Michael Bronstein. Explanatory Learning: Unleashing New Knowledge in Unknown Languages [video recording]

- 15/02/2023 University of Cambridge, CS department (UK 🇬🇧). ASIF: Coupled Data Turns Unimodal Models to Multimodal Without Training

- 12/09/2022 Tokyo Institute of Technology, CS department (Japan 🇯🇵). From sound to metric priors: new paradigms for shape generation

- 09/06/2022 Quantum Photonics, ONG (Virtual 🌐). How to create an artificial scientist

- 17/12/2021 International Symposium “Giornalismo e disinformazione”, UniPa (Palermo, 🇮🇹). Unire i puntini: meraviglie e limiti dei moderni sistemi di IA

- 12/06/2020 Cassini Junior Workshop, French Embassy in Italy and SSAS (Rome 🇮🇹). Towards a human-level artificial intelligence

- 19/02/2019 Italian Association for Machine Learning, ML meetup (Rome 🇮🇹). The Italian AlphaZero [360° clip]

My posters

Posters of my highlighted research papers, as they appeared at ICML 2023 and NeurIPS 2023. Click to see them in their full glory!

Advising

I enjoy mentoring younger students and coadvising them on their theses. If you are super passionate about AI and looking for what to do next or for a thesis (maybe an AI agent for a board game involving a cool challenge?), feel free to reach out!

Students I have advised on their BSc/MSc theses:

- Robert Adrian Minut, MSc, Backward LLMs (Now PhD at Sapienza)

- Alessandro Zirilli, BSc, AlphaZero for Hex

- Ahmedeo Shokry, MSc, AI and Feynman Diagrams (Now PhD at École Polytechnique)

- Giovanni Quadraroli, BSc, DeepRL for Space Invaders

- Guido Maria D’Amely Di Melendugno, MSc, DL for Contract Bridge (Now PhD at Sapienza)

News

- 10/10/2025 📍 Moving to the US 🇺🇸 to start a new postdoc at MIT CSAIL in Boston, Massachusetts, to work with prof. Daniela Rus, Phillip Isola, and Jacob Andreas.

- 17/11/2024 👨🏫 I was in Bertinoro 🇮🇹 to launch the yearly project for the students of Scuola Ortogonale, an excellence degree for CS master students in Italy, where I was invited to be a mentor.

- 13/11/2024 💬 All-team CETI meeting in Dominica 🇩🇲 to finally meet in person all the cool people working in the Biology, Robotics, Theory, and ML teams

- 06/11/2024 📍 End of my visit to Daniela Rus’s lab at MIT CSAIL. I enjoyed Boston 🇺🇸 for one month, working on CETI and uncertainty in LLMs. Stay tuned!

- 26/06/2024 🎤 Invited talk at BAIR (US 🇺🇸), hosted by Stuart Russell’s group: Explanatory Learning: Towards Artificial Scientific Discovery

- 09/05/2024 📏 I organized the social “Your new Scholar profile” at ICLR 2024 in Vienna 🇦🇹, where I also launched BetterScholar with Bardh Prenkaj. Read more here: Why BetterScholar?

- 26/02/2024 📍 Moving to the UK 🇬🇧 to start a postdoc at Oxford University, to work with prof. Michael Bronstein on the CETI project.

- 26/01/2024 ⭐ I successfully defended my thesis on Artificial Scientific Discovery, and was awarded the PhD degree in computer science cum laude. You can watch it here! 😎

- 15/12/2023 📏 Served as panel chair at the UniReps Workshop at NeurIPS 2023. We talked about unifying representations in neural models with Andrew Saxe, Sophia Sanborn, and Andrew Lampinen.

- 24/11/2023 🎤 Nostalgic invited talk at the Physics department of Sapienza (Rome, Italy 🇮🇹), hosted by Federico Muciaccia: Explanatory Learning: Towards Artificial Scientific Discovery.

- 22/09/2023 🎤 Invited talk at CENTAI (Turin, Italy 🇮🇹), hosted by the wonderful group of Giovanni Petri: Explanatory Learning: Towards Artificial Scientific Discovery.

- 21/09/2023 📰 Two papers accepted at NeurIPS 23: ASIF: Coupled Data Turns Unimodal Models to Multimodal without Training and Latent Space Translation via Semantic Alignment, see you in New Orleans! 🐊

- 28/07/2023 📰 Presented our poster at the KLR workshop at ICML (Honolulu, US 🇺🇸): Explanatory Learning: Towards Artificial Scientific Discovery

- 17/07/2023 🎤 Invited speaker at the workshop il Futuro Annunciato, to show the golden path of Computer Science to young students from physics and other hard sciences. (Rocca Sinibalda, Italy 🇮🇹)

- 14/07/2023 📍 Back in Rome from Haifa: end of my visit at Technion.

- 15/06/2023 💬 Invited research visit to the Stanford NLP group (Palo Alto, US 🇺🇸) hosted by Federico Bianchi to talk about ASIF.

- 14/06/2023 🎤 Invited talk at BAIR (US 🇺🇸), hosted by the Berkeley NLP Group: Explanatory Learning: Unleashing New Knowledge in Unknown Languages.

- 12/06/2023 🎤 Invited talk at the Simons Institute at the University of California, Berkeley (US 🇺🇸), for the workshop Decoding Communication in Nonhuman Species II, organized by David Gruber, Shafi Goldwasser, and Michael Bronstein. Explanatory Learning: Unleashing New Knowledge in Unknown Languages [video recording]

- 05/05/2023 🎤 Invited speaker at the panel Intelligenza artificiale: il racconto del cinema tra fantascienza e scenari of the 2023 Catania Book Festival (Catania, Italy 🇮🇹).

- 18/03/2023 📍 Moving to Haifa (Israel 🇮🇱) to start a research visit at Technion to work with Alex Bronstein on multimodal models.

- 21/02/2023 💬 Invited research visit to DeepMind (London, UK 🇬🇧) hosted by Marc’Aurelio Ranzato to talk about ASIF and RelReps.

- 20/02/2023 💬 Invited research visit to the University of Oxford (UK 🇬🇧) hosted by Michael Bronstein to talk about Explanatory Learning, ASIF, and RelReps [Emanuele giving the talk].

- 16/02/2023 🎤 Invited talk at Autodesk London (UK 🇬🇧) hosted by Hooman Shayani: ASIF: Coupled Data Turns Unimodal Models to Multimodal Without Training.

- 15/02/2023 🎤 Invited talk at the University of Cambridge (UK 🇬🇧) hosted by Pietro Liò: ASIF: Coupled Data Turns Unimodal Models to Multimodal Without Training.

- 14/02/2023 💬 Invited research visit to Imperial College London (UK 🇬🇧) hosted by Fabrizio Frasca to talk about ASIF and RelReps. [Luca’s talk before mine].

- 13/02/2023 💬 Invited research visit to University College London (UK 🇬🇧), hosted by Andrew Saxe to talk about RelReps. [Lunch selfie]

- 07/02/2023 💬 Invited research visit to BOSCH AI (Renningen, Germany 🇩🇪) to talk about applications of our latest work Relative Representations Enable Zero-shot Latent Space Communication (RelReps).

- 02-03/2023 👨🏫 Teaching assistant for the 2023 Deep Learning & applied AI course in the Sapienza CS master's degree. I was responsible for the first lab sessions (5 hours).

- 21/01/2023 📰 Relative Representations Enable Zero-shot Latent Space Communication accepted as notable-top-5% at ICLR-2023.

- 04/10/2022 📰 ASIF: Coupled Data Turns Unimodal Models to Multimodal Without Training is now on arXiv!

- 20/09/2022 🎤 Invited speaker at the symposium Saremo assimilati? Meraviglie, trappole e limiti dell’intelligenza artificiale, organized by the Italian Order of Journalists (Cagliari, Italy 🇮🇹).

- 12/09/2022 🎤 Invited talk at the Tokyo Institute of Technology (Tokyo, Japan 🇯🇵) hosted by Asako Kanezaki: From sound to metric priors: new paradigms for shape generation

- 06/09/2022 📰 Errare humanum est? a pilot study to evaluate the human-likeness of a AI othello playing agent published at IVA 22

- 05/09/2022 📰 Presented our poster at the ml4evolang workshop at the JCoLE conference (Kanazawa, Japan 🇯🇵): Learning to make sense out of ambiguous messages leads to language evolution, a simulation

- 19/07/2022 🎤 Invited talk at DataScienceSeed (in Italian, virtual): Explanatory Learning: può una macchina imparare a formulare teorie?

- 15/07/2022 📍 Back in Rome from Tübingen: end of my internship at Amazon (internship project: ASIF)

- 09/06/2022 ⭐ Our Symbol Interpretation Task in BIG-bench is the one with the widest gap between human and machine performance

- 09/06/2022 🎤 Invited talk at Quantum Photonics (virtual): How to create an artificial scientist

- 27/04/2022 👨🏫 Guest lecture for the Sapienza Computer Science course Deep Learning and applied AI, titled Towards an artificial scientist.

- 08/03/2022 📰 OLIVAW: Mastering Othello without Human Knowledge, nor a Penny published in IEEE Transactions on Games

- 25/01/2022 📰 Explanatory Learning: Beyond Empiricism in Neural Networks is now on arXiv! Twitter thread

- 15/01/2022 📍 Moving to Tübingen (Germany 🇩🇪) to start a research internship at Amazon Science where I will work with Francesco Locatello.

- 17/12/2021 🎤 Invited speaker at the International Symposium Giornalismo e disinformazione at UniPa (Palermo, Italy 🇮🇹), panel Umano, troppo umano? Intelligenza Artificiale e disinformazione

- 08/11/2021 📏 Organizer of the Sapienza CS Doctoral Workshop 2021 in the fortress of the medieval village of Bertinoro (Italy 🇮🇹), a four-day summit of talks, activities, and networking.

- 02-05/2021 👨🏫 Teaching assistant for the 2021 Deep Learning & applied AI course in the Sapienza CS master's degree. I was responsible for the lab sessions (20 hours). This year we added a tutorial on Transformers, of which I curated the first part. I again coauthored the midterm and written exams.

- 13/11/2020 🎤 Contributed talk at AAAI Fall Symposium on Abstraction and Analogy in AI (virtual): The value of a rationalist approach in AI

- 12/06/2020 🎤 Invited talk at Cassini Junior Workshop, organized by the French Embassy in Italy and SSAS (Rome 🇮🇹): Towards a human-level artificial intelligence

- 27/03/2020 📰 LIMP: Learning latent shape representations with metric preservation priors accepted as oral at ECCV-2020.

- 26/02/2020 🎤 Contributed talk at Technion - Israel Institute of Technology (Haifa, Israel 🇮🇱): Learning deformable style transfer via differentiable intrinsic distances

- 02-05/2020 👨🏫 Teaching assistant for the 2020 Deep Learning & applied AI course in the Sapienza CS master's degree. I was responsible for the lab sessions (20 hours), coauthoring 10 tutorials and the written exams.

- 01/11/2019 📍 Started my PhD at Sapienza University of Rome advised by prof. Emanuele Rodolà

- 19/02/2019 🎤 Invited talk at the ML meetup from the Italian Association for Machine Learning (Rome 🇮🇹): The Italian AlphaZero